It’s good to be back.

I decided to take a summer holiday, of sorts, stepping back from the pressure of writing these articles. If you know me, you’ll recall that I’ve barely had a holiday since I started my professional life, much to the consternation of my family. So I decided to take a little time for myself this year. Given that this year has been, er, rather unusual to say the least, it seemed the perfect opportunity.

These articles are a labour of love and earn me absolutely nothing in monetary terms, so I also work a day job to earn a living, which limits the time I have for writing. I really enjoy the writing, though, and hope to make it a significant part of my professional life in the near future.

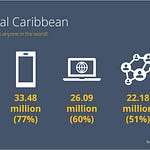

Speaking of which, I have a small favour to ask of you, my dear readers. I’m running a small study about the ICT industry in the eastern Caribbean and have concocted a short survey to give me an overview of the market. If only half of you respond, I’ll be well on my way to having useful data to work with. I’m sure you can be that half :)

It’s not all one-way either. The better the data and the more data I have, the more I’ll write about the results here directly to your inboxes. You give, I give. What could be fairer?

You can take the survey here:

Thanks for your help.

Disrupting Intel

Last year I wrote about how Intel appeared to be ignoring the threat of disruption to its core business, following, virtually to the letter, the path predicted by Clayton Christensen’s Disruption Theory (DT). From that article:

If we follow DT to its conclusion, it is possible to see the risks Intel poses for itself, namely being innovated out of business. I’m clearly not suggesting that Intel will fail next year, but I think the long-term future is at risk if there is not some kind of reaction, with Intel creating further opportunities.

I wrote at the time that Intel was concentrating on moving further up the stack into increasingly profitable areas, avoiding the threat from lower-end processor makers like AMD, and that its Pohoiki Beach neuromorphic computing system was designed to ensure Intel’s prosperous future.

It was a good strategy on the face of it. Desktop and laptop chips were facing stiffer competition than ever, something that was not the case in Intel’s heyday. The real threat, ARM designs from Advanced RISC Machines, was only beginning to poke its head out of the labs, and whilst ARM had ambitions of capturing a small percentage of the market (10%, if I recall correctly), that, together with AMD, put real pressure on Intel. Intel had to react, and it did so by moving upscale and raising margins on those products to compensate for lower unit volumes.

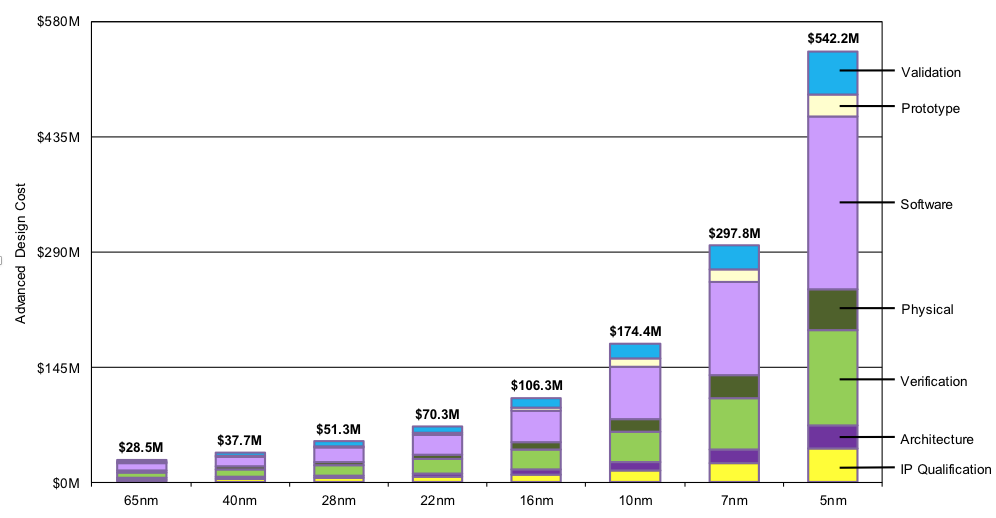

The thing many people don’t understand is just how phenomenally expensive it is to design a CPU. It takes months of research and prototyping, and each iteration and innovation adds substantially to those costs. As CPUs are designed with ever-smaller transistors, costs go the other way, and exponentially so. Design costs are often dwarfed by tooling costs too: tooling is the process of building fabrication plants and bringing them online to make the processors. Marketing is another expensive cost centre. Intel has famously poured millions into elaborate marketing campaigns to convince the public that laptop chips only come from them.

Other factors influence the costs too. CPUs are no longer defined only by their clock speed (which has largely plateaued today, because pushing it higher, or sustaining it for long periods, produces more heat than the silicon can safely handle); they are now also defined by their transistor size in nanometres, or nm. Processor specifications talk of 14nm, 10nm and smaller. The following chart from International Business Strategies (IBS) gives an idea of the estimated costs and how they have multiplied as transistors have shrunk:

Source: IBS

In the beginning, when it was a simple arms race of raw processor speeds, Moore’s law (the observation that the number of transistors on a chip doubles roughly every two years, often quoted as every 18 months) meant that Intel could produce faster and faster chips for its target markets, namely desktops and servers. The server-specific chips came further down the road, after Intel saw the opportunity to custom-design and build what were essentially desktop-class chips for a burgeoning market of businesses that needed to store documents and applications centrally. This followed the second level of the Digital Transformation model I wrote about in Issue 4: The Digital Transformation model in detail:

Internal Exploitation

The second level, Internal Exploitation, is defined by the process in which organisations attempt to integrate the different silos of information systems and datasets with the aim of producing a ‘whole’. Integration is difficult, slow and often results in failure when starting from a base that is not adapted to integration. Just how do you get the Accounts, Stock, HR and Sales systems integrated?

There are two types of integration: technical integration and business process interdependence. According to the model, most enterprises spend more time integrating at a technical level than at the business-process level.
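To put the Moore’s law doubling mentioned above into numbers, here is a rough worked example. It assumes a simple exponential model, where $N_0$ is the starting transistor count, $t$ the elapsed time in years and $T$ the doubling period; the 10-year horizon is purely illustrative and these are not Intel’s actual figures:

$$
N(t) = N_0 \cdot 2^{t/T}
\qquad\Rightarrow\qquad
\frac{N(10)}{N_0} = 2^{10/2} = 32 \ \ (T = 2), \qquad
\frac{N(10)}{N_0} = 2^{10/1.5} \approx 102 \ \ (T = 1.5)
$$

Either way, a decade of doubling means roughly 30 to 100 times as many transistors per chip, which goes some way to explaining how raw performance gains carried Intel for so long.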

Since then, the battle has become more technical and has required close coordination between the designers’ wants and the builders’ capabilities. So far, Intel has been outpaced in shrinking its transistors by the likes of TSMC, which has become the world leader in producing the most densely packed systems-on-a-chip. TSMC is not the only one either: Qualcomm and a couple of others are also at the forefront of ever-tinier chips, year in, year out.

The keen-eyed among you will note that I switched from talking about CPUs and processors to systems-on-a-chip (SoCs, pronounced “socks”). That is where the main battle is currently playing out: not on pure CPUs but on chips that combine several previously separate ancillary systems on the same die. Graphics, memory and other components are being shrunk and brought physically closer to the processing units. In these minute devices, even a fraction of a millimetre can yield significant gains in data exchange or processing.

Intel seems to be having trouble developing and manufacturing smaller transistors reliably, which partly explains its reliance on multi-core and multi-processor CPU designs. Those designs don’t need to be too concerned with size, power or heat dissipation. A desktop or a server is, for all intents and purposes, plugged into an infinite power source, and the cooling systems in server rooms, or the space around a machine in an office, provide all the heat dissipation required to ensure stable operation.

From the beginning, Intel took responsibility for designing, making, marketing and shipping its chips to PC and server makers. This vertically integrated strategy served it well, so well in fact that Intel became the de facto world leader in processors. Remember Intel Inside? But as recent news highlights, what is traditionally a strength for an organisation can be turned into a weakness when competitors understand and apply disruption theory well.

Intel has now revealed that it is nearly a whole generation, or “node” as it is known in the industry, behind TSMC. As a result, it has said it is considering outsourcing some of its production to... none other than the company outpacing it in chip building: TSMC, of course. From the FT:

“To make up for the delay, Intel said it was considering turning to outside manufacturers from 2023 onwards for some of its production — a plan that appeared to leave little option but to subcontract its most advanced manufacturing to TSMC. The shift raised the prospect of a change in Intel’s business model, according to some analysts, forcing it to consider abandoning more of its manufacturing to focus on design.”

Classic disruption theory!

Cementing TSMC’s lead in processor manufacturing is another piece of news that may send shockwaves around the industry. No doubt most of you have heard the long-awaited news that Apple, following its successful transition from PowerPC to Intel processors years ago, has announced its intention to move its entire line of Macs to its in-house designed A-series processors: the same family of processors that powers its iPhones and iPads, and probably countless other products in its range.

These SoCs are currently world leaders in speed, power efficiency and heat management. For reference, the release of the iPhone 11 and iPad Pro showed that Apple-designed processors were faster than most of the Intel and AMD processors found in laptops of the day. Apple has successfully designed its processors on architecture licensed from a small, relatively unknown player in the CPU market, producing a succession of world-beaters. That partner is ARM, or Advanced RISC Machines. (If you’re interested, I could bore you with the ins and outs of the different instruction-set philosophies behind CPUs; the RISC bit stands for Reduced Instruction Set Computer.) Apple’s chips are all built by TSMC.

Once Intel lets go of this market, it will be all but impossible to win it back. Don’t worry, Intel is not going out of business anytime soon, and will undoubtedly record higher profits and better margins as it shifts its production away from a market that looks set to transition lock, stock and barrel to ARM-designed processors and SoCs. Intel’s own mobile-focused processors are starting to get better, but it is too little, too late in my opinion.

Blockchain, Schmockchain

Regular readers will know that I have mostly been sceptical of Blockchain’s potential to change the world. It is currently a big, slow database that complicates things rather than simplifying them. In Blockchain ≠ Cryptocurrency, I said:

... that it is a huge energy consumer and hence by definition is inefficient. That, sadly, is not its only efficiency problem. Blockchain is actually extremely limited in its speed and quantity of transactions and scales poorly. So much so that in 2016 several banks exploring the possibility of using the technology in the personal and business banking sector abandoned the work as blockchain was just too slow.
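To give a sense of that throughput ceiling, here is a back-of-the-envelope calculation for Bitcoin, the best-known public blockchain. It assumes the original 1 MB block size limit, a block roughly every 10 minutes (600 seconds) and an average transaction of about 250 bytes; real figures vary, and other blockchains make different trade-offs:

$$
\frac{1{,}000{,}000\ \text{bytes/block}}{250\ \text{bytes/tx}} = 4{,}000\ \text{tx/block},
\qquad
\frac{4{,}000\ \text{tx/block}}{600\ \text{s/block}} \approx 6.7\ \text{tx/s}
$$

Single-digit transactions per second go some way to explaining why blockchain struggles at the scale of everyday banking.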

According to a detailed, academic-style, “peer-reviewed” study by the Centre for Evidence-Based Blockchain, as reported in the FT:

... outside of cryptoland, where blockchain does actually have a purpose insofar as it allows people to pay each other in strings of 1s and 0s without an intermediary, we have never seen any evidence that it actually does anything, or makes anything better. Often, it seems to make things a whole lot worse.

Worse, the report repeatedly highlights that the technology is a solution looking for a problem: the antithesis of the Jobs to be Done theory, which helps us design and deliver better solutions. With over 55% of projects showing no evidence of useful outcomes and over 45% showing only “unfiltered evidence” (i.e., next to worthless), it would appear that Blockchain is a belief system rather than a technological solution.

The Future is Digital Newsletter is intended for anyone interested in digital technologies and how they affect their business. I’d really appreciate it if you would share it with those in your network.

If this email was forwarded to you, I’d love to see you on board. You can sign up here:

Visit the website to read all the archives. And don’t forget, you can comment on the article and my writing here:

Thanks for being a supporter, have a great day.